Manage VantageCloud Lake Compute Clusters with Apache Airflow

Overview

This tutorial shows how to use the Teradata Airflow compute cluster operators to manage VantageCloud Lake compute clusters. The goal is to run the dbt transformations defined in the jaffle_shop dbt project on VantageCloud Lake compute clusters.

On Windows, use the Windows Subsystem for Linux (WSL) to try this quickstart example.

Prerequisites

- Ensure you have the necessary credentials and access rights to use Teradata VantageCloud Lake.

- Python 3.8, 3.9, 3.10, or 3.11, with python3-venv and python3-pip installed, on one of the following platforms:

  - Linux

  - WSL (run the setup in PowerShell)

  - macOS

Refer to the Installation Guide if you face any issues.
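As a quick check of the interpreter requirement, the following minimal sketch reports whether the running Python is one of the versions listed above. The helper name `is_supported_python` is illustrative and is not part of any Teradata or Airflow tooling.

```python
import sys

# Versions listed in the prerequisites of this tutorial.
SUPPORTED_VERSIONS = {(3, 8), (3, 9), (3, 10), (3, 11)}


def is_supported_python(version_info=None):
    """Return True if the (major, minor) pair is one of the listed versions."""
    if version_info is None:
        version_info = sys.version_info
    return tuple(version_info[:2]) in SUPPORTED_VERSIONS


if __name__ == "__main__":
    major, minor = sys.version_info[:2]
    status = "listed as supported" if is_supported_python() else "not listed as supported"
    print(f"Python {major}.{minor} is {status} for this tutorial.")
```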

Install Apache Airflow and Astronomer Cosmos

- Create a new Python environment to manage Airflow and its dependencies. Activate the environment:

  Note: This will install Apache Airflow as well.

- Install the Apache Airflow Teradata provider.

- Set the AIRFLOW_HOME environment variable.
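The steps above can be sketched as a small Python script. This is only one possible way to script them, not the tutorial's own commands: the environment name `airflow_env`, the `~/airflow` default, and the helper names are assumptions made for illustration. `astronomer-cosmos` and `apache-airflow-providers-teradata` are the PyPI names of Astronomer Cosmos and the Teradata provider.

```python
import os
import subprocess
import sys
from pathlib import Path


def airflow_setup_commands(env_dir="airflow_env"):
    """Build the commands that create the environment and install the packages."""
    pip = str(Path(env_dir) / "bin" / "pip")  # POSIX layout (Linux, WSL, macOS)
    return [
        [sys.executable, "-m", "venv", env_dir],
        [pip, "install", "astronomer-cosmos"],  # installs Apache Airflow as a dependency
        [pip, "install", "apache-airflow-providers-teradata"],
    ]


def run_setup(env_dir="airflow_env", airflow_home="~/airflow"):
    """Run the commands in order, stopping at the first failure."""
    # AIRFLOW_HOME is set only for the child processes started here.
    env = dict(os.environ, AIRFLOW_HOME=str(Path(airflow_home).expanduser()))
    for command in airflow_setup_commands(env_dir):
        subprocess.run(command, check=True, env=env)
    return env["AIRFLOW_HOME"]


if __name__ == "__main__":
    print("AIRFLOW_HOME =", run_setup())
```

Because the script sets AIRFLOW_HOME only for its own child processes, the variable still needs to be exported in the shell that later runs Airflow.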

Install dbt

- Create a new Python environment to manage dbt and its dependencies. Activate the environment:

- Install the dbt-teradata and dbt-core modules:
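Once the modules are installed, a check along the following lines, run with the interpreter of the dbt environment, can confirm that both distributions are visible. It is a minimal sketch using the standard library's `importlib.metadata`; the helper name `installed_versions` is illustrative.

```python
from importlib import metadata


def installed_versions(packages=("dbt-core", "dbt-teradata")):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name}: {version or 'not installed in this environment'}")
```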

Create a database

A database client connected to VantageCloud Lake is needed to execute SQL statements. Vantage Editor Desktop or DBeaver can be used for this purpose.

Let's create the jaffle_shop database in the VantageCloud Lake instance with TD_OFSSTORAGE as the default storage.

Create a database user

A database client connected to VantageCloud Lake is needed to execute SQL statements. Vantage Editor Desktop or DBeaver can be used to execute the CREATE USER query.

Let's create a user named lake_user in the VantageCloud Lake instance.

Grant access to user

A database client connected to VantageCloud Lake is needed to execute SQL statements. Vantage Editor Desktop or DBeaver can be used to execute the GRANT queries.

Let's grant the user lake_user the privileges required to manage compute clusters.

Setup dbt project

- Clone the jaffle_shop repository and cd into the project directory:

- Create a new folder named dbt inside the $AIRFLOW_HOME/dags folder. Then copy the jaffle_shop dbt project into the $AIRFLOW_HOME/dags/dbt directory.
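The copy step can be sketched in Python as follows. The helper name `copy_dbt_project` and the `~/airflow` fallback are assumptions for illustration. The check for `dbt_project.yml` relies on the standard layout of a dbt project, and the sketch assumes the project ends up in a `jaffle_shop` subfolder of `dags/dbt`.

```python
import os
import shutil
from pathlib import Path


def copy_dbt_project(project_dir, airflow_home):
    """Copy a dbt project into <airflow_home>/dags/dbt/<project name>."""
    source = Path(project_dir).expanduser().resolve()
    if not (source / "dbt_project.yml").is_file():
        raise FileNotFoundError(f"{source} does not look like a dbt project")
    target = Path(airflow_home).expanduser() / "dags" / "dbt" / source.name
    target.parent.mkdir(parents=True, exist_ok=True)
    # Skip git metadata; dirs_exist_ok (Python 3.8+) allows re-running the copy.
    shutil.copytree(
        source, target, ignore=shutil.ignore_patterns(".git"), dirs_exist_ok=True
    )
    return target


if __name__ == "__main__":
    home = os.environ.get("AIRFLOW_HOME", "~/airflow")
    print(copy_dbt_project("jaffle_shop", home))
```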

Configure Apache Airflow

- Switch to the virtual environment where Apache Airflow was installed in the Install Apache Airflow and Astronomer Cosmos section.

- Configure environment variables to enable the test connection button and to prevent sample DAGs and default connections from loading in the Airflow UI.

- Define the path of the jaffle_shop project as the environment variable dbt_project_home_dir.

- Define the path to the virtual environment where dbt-teradata was installed as the environment variable dbt_venv_dir.

  Note: You might need to change /../../ to the specific path where the dbt_env virtual environment is located.
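Before starting Airflow, the configuration above can be checked with a short script. This is a sketch under stated assumptions: the three `AIRFLOW__<SECTION>__<KEY>` names follow Airflow's general convention for configuration overrides and its configuration reference for recent 2.x releases, and are not taken from this tutorial, so verify them against the installed version. The helper name `check_environment` is illustrative.

```python
import os
from pathlib import Path

# Assumed variable names, following Airflow's AIRFLOW__<SECTION>__<KEY> convention.
AIRFLOW_SETTINGS = {
    "AIRFLOW__CORE__TEST_CONNECTION": "Enabled",  # show the test connection button
    "AIRFLOW__CORE__LOAD_EXAMPLES": "False",  # do not load the sample DAGs
    "AIRFLOW__DATABASE__LOAD_DEFAULT_CONNECTIONS": "False",  # no default connections
}


def check_environment(env):
    """Return a list of human-readable problems found in the given mapping."""
    problems = []
    for name, expected in AIRFLOW_SETTINGS.items():
        if env.get(name, "").lower() != expected.lower():
            problems.append(f"{name} should be set to {expected}")
    project = env.get("dbt_project_home_dir")
    if not project or not (Path(project) / "dbt_project.yml").is_file():
        problems.append("dbt_project_home_dir must point at the jaffle_shop project")
    venv = env.get("dbt_venv_dir")
    if not venv or not Path(venv).exists():
        problems.append("dbt_venv_dir must point at an existing path")
    return problems


if __name__ == "__main__":
    for line in check_environment(os.environ) or ["environment looks complete"]:
        print(line)
```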

Start Apache Airflow web server

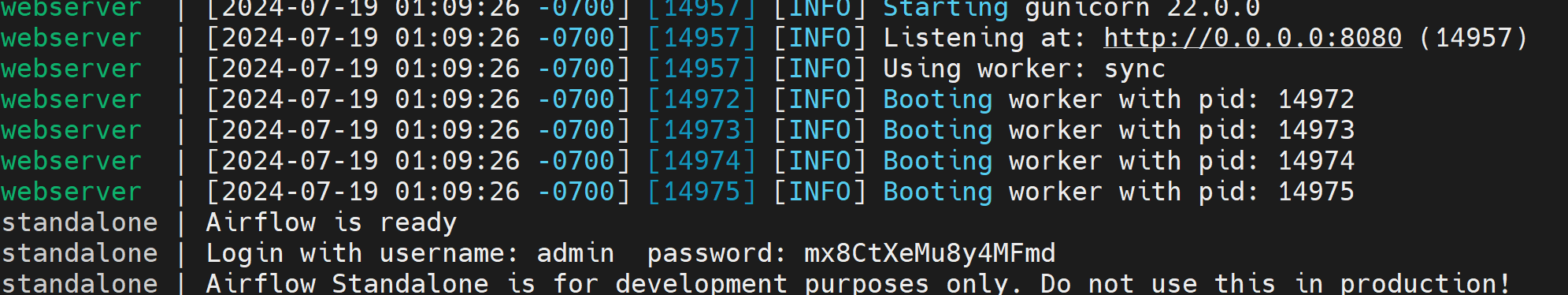

- Run the Airflow web server.

- Access the Airflow UI. Visit http://localhost:8080 in the browser and log in with the admin account details shown in the terminal.

Define a connection to VantageCloud Lake in Apache Airflow

- Click on Admin - Connections.

- Click on + to define a new connection to the Teradata VantageCloud Lake instance.

- Define the new connection with id teradata_lake and the Teradata VantageCloud Lake instance details:

  - Connection Id: teradata_lake

  - Connection Type: Teradata

  - Database Server URL (required): Teradata VantageCloud Lake instance hostname or IP to connect to.

  - Database: jaffle_shop

  - Login (required): lake_user

  - Password (required): lake_user
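For reference, the same fields can also be expressed as a JSON connection in an `AIRFLOW_CONN_<CONN_ID>` environment variable, which Airflow 2.3 and later can read. The sketch below only builds that variable. It assumes that the form's Database field corresponds to the connection's `schema` attribute and that the provider's connection type string is `teradata`. The host is a placeholder, and the helper name is illustrative. Connections defined through environment variables are not listed under Admin - Connections, so the UI steps above remain the path this tutorial follows.

```python
import json
import shlex


def connection_env_var(conn_id, host, database, login, password):
    """Return (variable name, JSON value) describing an Airflow connection."""
    name = f"AIRFLOW_CONN_{conn_id.upper()}"
    value = json.dumps(
        {
            "conn_type": "teradata",  # assumed connection type string
            "host": host,
            "schema": database,  # assumed to back the "Database" field of the form
            "login": login,
            "password": password,
        }
    )
    return name, value


if __name__ == "__main__":
    # "<lake-hostname>" is a placeholder; the other values come from this tutorial.
    name, value = connection_env_var(
        "teradata_lake", "<lake-hostname>", "jaffle_shop", "lake_user", "lake_user"
    )
    print(f"export {name}={shlex.quote(value)}")
```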

Define DAG in Apache Airflow

DAGs in Airflow are defined as Python files. The DAG below runs the dbt transformations defined in the jaffle_shop dbt project using VantageCloud Lake compute clusters. Copy the Python code below and save it as airflow-vcl-compute-clusters-manage.py under the directory $AIRFLOW_HOME/dags.
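A DAG of this kind might look like the following sketch. It is not the tutorial's original code. The compute group and profile names, the DAG id, the `STANDARD` and `TD_COMPUTE_XSMALL` values, and the dbt profile and target names are assumptions. The operator and Cosmos parameter names reflect the provider and Cosmos documentation as best recalled here, so check them against the installed versions. How dbt sessions are routed to the provisioned compute group is outside the scope of this sketch.

```python
import os
from datetime import datetime
from pathlib import Path

CONN_ID = "teradata_lake"  # connection defined in the previous section
# Hypothetical names for the compute group and profile; choose your own.
COMPUTE_GROUP = "dbt_compute_group"
COMPUTE_PROFILE = "dbt_compute_profile"


def dbt_executable(venv_setting):
    """Accept either the dbt binary itself or the virtual environment root."""
    path = Path(venv_setting)
    return path if path.name == "dbt" else path / "bin" / "dbt"


def build_dag():
    # Imported lazily so the helpers above work without Airflow installed.
    from airflow import DAG
    from airflow.providers.teradata.operators.teradata_compute_cluster import (
        TeradataComputeClusterDecommissionOperator,
        TeradataComputeClusterProvisionOperator,
    )
    from cosmos import DbtTaskGroup, ExecutionConfig, ProfileConfig, ProjectConfig
    from cosmos.profiles import TeradataUserPasswordProfileMapping

    with DAG(
        dag_id="manage_vcl_compute_clusters",  # hypothetical DAG id
        start_date=datetime(2024, 1, 1),
        schedule=None,  # trigger manually from the UI
        catchup=False,
    ) as dag:
        provision = TeradataComputeClusterProvisionOperator(
            task_id="provision_compute_cluster",
            compute_profile_name=COMPUTE_PROFILE,
            compute_group_name=COMPUTE_GROUP,
            teradata_conn_id=CONN_ID,  # assumed parameter name
            query_strategy="STANDARD",  # assumed value
            compute_map="TD_COMPUTE_XSMALL",  # assumed value
        )
        transform = DbtTaskGroup(
            group_id="dbt_transformations",
            project_config=ProjectConfig(
                dbt_project_path=os.environ["dbt_project_home_dir"]
            ),
            profile_config=ProfileConfig(
                profile_name="jaffle_shop",  # assumed dbt profile name
                target_name="dev",  # assumed dbt target name
                profile_mapping=TeradataUserPasswordProfileMapping(
                    conn_id=CONN_ID, profile_args={"schema": "jaffle_shop"}
                ),
            ),
            execution_config=ExecutionConfig(
                dbt_executable_path=str(dbt_executable(os.environ["dbt_venv_dir"]))
            ),
        )
        decommission = TeradataComputeClusterDecommissionOperator(
            task_id="decommission_compute_cluster",
            compute_profile_name=COMPUTE_PROFILE,
            compute_group_name=COMPUTE_GROUP,
            teradata_conn_id=CONN_ID,  # assumed parameter name
        )
        # Provision the cluster, run the dbt models, then release the cluster.
        provision >> transform >> decommission
    return dag
```

In the DAG file itself, end with `dag = build_dag()` at module level so that Airflow's DAG discovery can find the resulting object.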

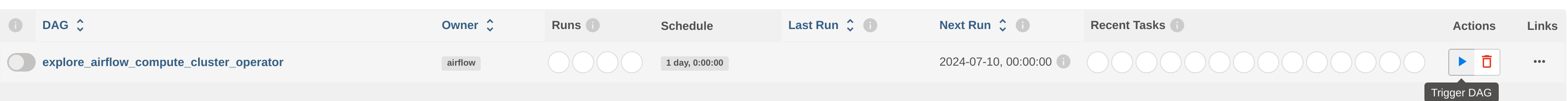

Load DAG

When the DAG file is copied to $AIRFLOW_HOME/dags, Apache Airflow displays the DAG in the UI under the DAGs section. It can take 2 to 3 minutes for the DAG to appear in the Apache Airflow UI.

Run DAG

Run the dag as shown in the image below.

Summary

In this quick start guide, we explored how to utilize Teradata VantageCloud Lake compute clusters to execute dbt transformations using Teradata compute cluster operators for Airflow.