Data Transfer from Azure Blob to Teradata Vantage Using Apache Airflow

Overview

This document provides instructions and guidance for transferring data in CSV, JSON and Parquet formats from Microsoft Azure Blob Storage to Teradata Vantage using the Airflow Teradata Provider and the Azure Cloud Transfer Operator. It outlines the setup, configuration and execution steps required to establish a seamless data transfer pipeline between these platforms.

On Windows, use the Windows Subsystem for Linux (WSL) to try this quickstart example.

Prerequisites

- Access to a Teradata Vantage instance, version 17.10 or higher.

Note

If you need a test instance of Vantage, you can provision one for free at https://clearscape.teradata.com

- Python 3.8, 3.9, 3.10 or 3.11, with python3-venv and python3-pip installed, on one of the following platforms:

- Linux

- WSL

- macOS

Refer to the Installation Guide if you face any issues.

Install Apache Airflow

- Create a new Python environment to manage Airflow and its dependencies, then activate it.

- Install the Apache Airflow Teradata provider package and the Microsoft Azure provider package.

- Set the AIRFLOW_HOME environment variable.

Configure Apache Airflow

- Switch to the virtual environment where Apache Airflow was installed in the Install Apache Airflow section.

- Configure the environment variables that enable the test connection button and prevent the sample DAGs and default connections from loading in the Airflow UI.

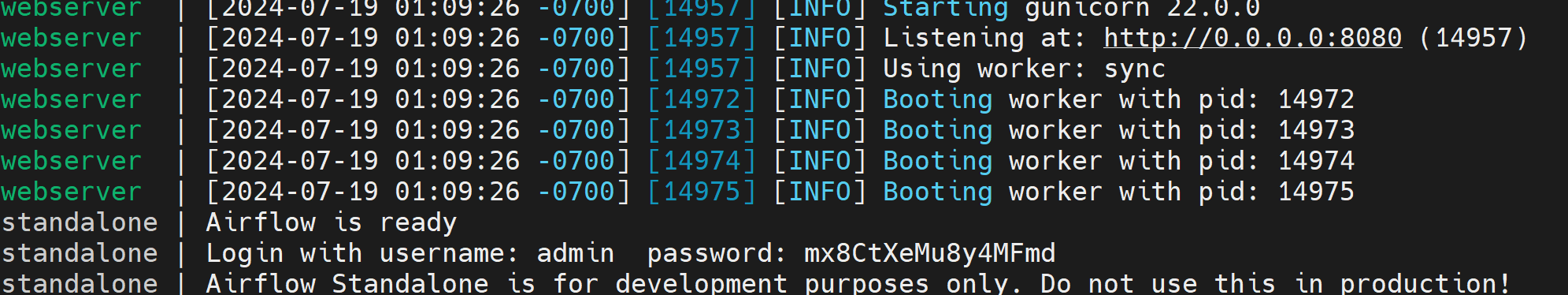
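The variable names themselves are not spelled out in this text. As a hedged sketch, the snippet below prints shell `export` lines for three Airflow settings that match the description above. The names follow Airflow's `AIRFLOW__{SECTION}__{KEY}` convention; both names and values are assumptions to check against the configuration reference for your Airflow version.

```python
# Assumed settings (not listed in this guide), named after Airflow's
# AIRFLOW__{SECTION}__{KEY} environment variable convention.
AIRFLOW_SETTINGS = {
    "AIRFLOW__CORE__TEST_CONNECTION": "Enabled",            # show the test connection button
    "AIRFLOW__CORE__LOAD_EXAMPLES": "False",                # skip the sample DAGs
    "AIRFLOW__DATABASE__LOAD_DEFAULT_CONNECTIONS": "False",  # skip the default connections
}


def export_lines(settings):
    """Render each setting as a shell `export NAME=value` line."""
    return [f"export {name}={value}" for name, value in settings.items()]


if __name__ == "__main__":
    print("\n".join(export_lines(AIRFLOW_SETTINGS)))
```

Running the script prints three `export` lines that can be pasted into the shell session before Airflow is started.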

Start the Apache Airflow web server

- Run Airflow's web server.

- Access the Airflow UI. Visit http://localhost:8080 in the browser and log in with the admin account details shown in the terminal.

Define the Apache Airflow connection to Vantage

- Click on Admin > Connections.

- Click on + to define a new connection to a Teradata Vantage instance.

- Assign the new connection the id teradata_default and enter the Teradata Vantage instance details:

- Connection Id: teradata_default

- Connection Type: Teradata

- Database Server URL (required): Teradata Vantage instance hostname to connect to.

- Database: database name

- Login (required): database user

- Password (required): database user password

Refer to Teradata Connection for more details.
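As an alternative to the UI form, Airflow can also read a connection from an environment variable named `AIRFLOW_CONN_{CONN_ID}` whose value is a JSON document. The sketch below builds such a value for `teradata_default`. The helper name and the placeholder host and credentials are hypothetical, and the mapping of the Database field to the `schema` key is an assumption about how the Teradata provider reads the connection.

```python
import json


def teradata_conn_env(host, login, password, database=None):
    """Return (variable name, JSON value) describing the teradata_default connection."""
    conn = {
        "conn_type": "teradata",
        "host": host,          # Database Server URL
        "login": login,        # database user
        "password": password,  # database user password
    }
    if database:
        # Assumption: the database name is stored in Airflow's `schema` field.
        conn["schema"] = database
    return "AIRFLOW_CONN_TERADATA_DEFAULT", json.dumps(conn)


if __name__ == "__main__":
    # Placeholder values; replace them with your Vantage instance details.
    name, value = teradata_conn_env(
        "your-vantage-host", "your-user", "your-password", "your-database"
    )
    print(f"export {name}='{value}'")
```

A connection defined this way is available to tasks at run time but is not shown in the Admin connections list, so the UI route described above remains the simpler choice when you want to use the test connection button.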

Define DAG in Apache Airflow

DAGs in Airflow are defined as Python files. The DAG below transfers data from Teradata-supplied public blob containers to a Teradata Vantage instance. Copy the Python code below and save it as airflow-azure-to-teradata-transfer-operator-demo.py under the directory $AIRFLOW_HOME/dags.

This DAG is a very simple example that covers:

- Dropping the destination table if it exists

- Transferring the data stored in object storage

- Getting the number of transferred records

- Writing the number of transferred records to the log

Refer to Azure Blob Storage To Teradata Operator for more information on the Azure Blob Storage to Teradata transfer operator.

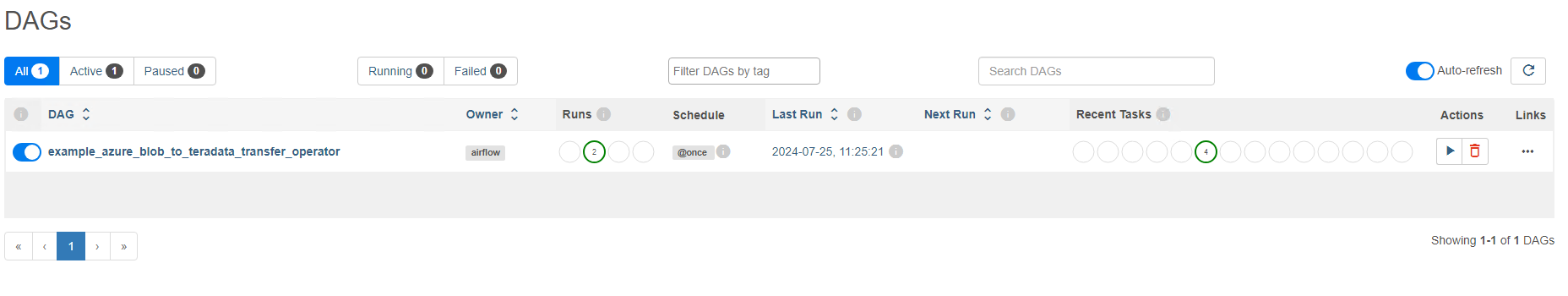
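The four steps listed above can be sketched as the following DAG. This is a minimal illustration, not necessarily the original listing: it assumes Airflow 2.x with the Teradata and Microsoft Azure provider packages installed, a provider version whose transfer operator accepts `public_bucket`, and the `teradata_default` connection defined earlier. The table name and the `YOUR-PUBLIC-OBJECT-STORE-URI` placeholder are hypothetical and must be replaced.

```python
import datetime
import logging

from airflow import DAG
from airflow.operators.python import PythonOperator
from airflow.providers.teradata.operators.teradata import TeradataOperator
from airflow.providers.teradata.transfers.azure_blob_to_teradata import (
    AzureBlobStorageToTeradataOperator,
)

CONN_ID = "teradata_default"
TABLE = "example_blob_teradata_csv"  # hypothetical destination table name


def log_record_count(ti):
    """Write the row count returned by read_data_table_csv to the task log."""
    rows = ti.xcom_pull(task_ids="read_data_table_csv")
    logging.getLogger(__name__).info("Transferred records: %s", rows)


with DAG(
    dag_id="example_azure_blob_to_teradata_transfer_operator",
    start_date=datetime.datetime(2020, 2, 2),
    schedule="@once",
    catchup=False,
    default_args={"teradata_conn_id": CONN_ID},
) as dag:
    # Step 1: drop the destination table; this task fails if the table is absent.
    drop_table_csv_exists = TeradataOperator(
        task_id="drop_table_csv_exists",
        sql=f"DROP TABLE {TABLE};",
    )
    # Step 2: load the CSV files; "all_done" lets this run even if the drop failed.
    transfer_data_csv = AzureBlobStorageToTeradataOperator(
        task_id="transfer_data_csv",
        blob_source_key="YOUR-PUBLIC-OBJECT-STORE-URI",  # placeholder, replace it
        public_bucket=True,
        teradata_table=TABLE,
        trigger_rule="all_done",
    )
    # Step 3: count the transferred rows; the result is pushed to XCom.
    read_data_table_csv = TeradataOperator(
        task_id="read_data_table_csv",
        sql=f"SELECT count(1) FROM {TABLE};",
    )
    # Step 4: write the count to the log.
    log_count = PythonOperator(
        task_id="log_record_count",
        python_callable=log_record_count,
    )

    drop_table_csv_exists >> transfer_data_csv >> read_data_table_csv >> log_count
```

The trigger rule on the transfer task is a design choice: on a first run the destination table does not exist yet, so the drop task is expected to fail and the transfer should still proceed.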

Load DAG

When the DAG file is copied to $AIRFLOW_HOME/dags, Apache Airflow displays the DAG in the UI under the DAGs section. It can take 2 to 3 minutes for the DAG to appear in the Apache Airflow UI.

Run DAG

Run the DAG as shown in the image below.

Transfer data from Private Blob Storage Container to Teradata instance

To successfully transfer data from a Private Blob Storage Container to a Teradata instance, the following prerequisites are necessary.

- An Azure account. You can start with a free account.

- Create an Azure storage account.

- Create a blob container under the Azure storage account.

- Upload CSV, JSON or Parquet format files to the blob container.

- Create a Teradata Authorization object with the Azure Blob Storage Account and the Account Secret Key.

Note

Replace AZURE_BLOB_ACCOUNT_SECRET_KEY with the access key of the Azure storage account azuretestquickstart.

- Modify blob_source_key with YOUR-PRIVATE-OBJECT-STORE-URI in the transfer_data_csv task and add the teradata_authorization_name field with the Teradata Authorization Object name.
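The last two steps can be sketched in Python as follows. The first helper renders a Teradata `CREATE AUTHORIZATION` statement for the storage account and its secret key; the second collects the keyword arguments that change on the transfer_data_csv task. Both helper names, the authorization name `azure_authorization`, and the table name are hypothetical; the sketch only builds values and does not connect to Vantage or Azure.

```python
def create_authorization_sql(auth_name, storage_account, secret_key):
    """Build a CREATE AUTHORIZATION statement for an Azure storage account."""

    def quote(value):
        # Double any single quotes so the value stays inside its SQL string literal.
        return "'" + value.replace("'", "''") + "'"

    return (
        f"CREATE AUTHORIZATION {auth_name} "
        f"USER {quote(storage_account)} PASSWORD {quote(secret_key)};"
    )


def private_transfer_kwargs(blob_source_key, auth_name, teradata_table):
    """Keyword arguments for the transfer task when the blob container is private."""
    return {
        "task_id": "transfer_data_csv",
        "blob_source_key": blob_source_key,
        "teradata_authorization_name": auth_name,
        "teradata_table": teradata_table,
    }


if __name__ == "__main__":
    # Placeholder values taken from the steps above; replace them with your own.
    print(create_authorization_sql(
        "azure_authorization", "azuretestquickstart", "AZURE_BLOB_ACCOUNT_SECRET_KEY"
    ))
    print(private_transfer_kwargs(
        "YOUR-PRIVATE-OBJECT-STORE-URI", "azure_authorization", "example_blob_teradata_csv"
    ))
```

Run the generated statement once in Vantage with a user allowed to create authorization objects, then pass the keyword arguments to the transfer operator in the DAG. Avoid committing the real secret key to the DAG file or to version control.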

Summary

This guide showed how to use the Airflow Teradata Provider’s Azure Cloud Transfer Operator to transfer CSV, JSON, and Parquet data from Microsoft Azure Blob Storage to Teradata Vantage.