Connect Azure Data Share to Teradata Vantage

Overview

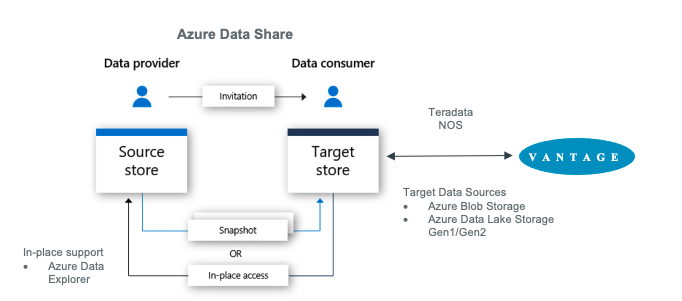

This article describes the process to share an Azure Blob Storage dataset from one user to another using Azure Data Share service and then query it with Teradata Vantage leveraging Native Object Store (NOS) capability. We will create and use a storage account and data share account for both users.

This is a diagram of the workflow.

About Azure Data Share

Azure Data Share enables organizations to simply and securely share data with multiple customers and partners. Both the data provider and data consumer must have an Azure subscription to share and receive data. Azure Data Share currently offers snapshot-based sharing and in-place sharing. Today, Azure Data Share supported data stores include Azure Blob Storage, Azure Data Lake Storage Gen1 and Gen2, Azure SQL Database, Azure Synapse Analytics and Azure Data Explorer. Once a dataset share has been sent using Azure Data Share, the data consumer is able to receive that data in a data store of their choice like Azure Blob Storage and then use Teradata Vantage to explore and analyze the data.

For more information see documentation.

About Teradata Vantage

Vantage is the modern cloud platform that unifies data warehouses, data lakes, and analytics into a single connected ecosystem.

Vantage combines descriptive, predictive, prescriptive analytics, autonomous decision-making, ML functions, and visualization tools into a unified, integrated platform that uncovers real-time business intelligence at scale, no matter where the data resides.

Vantage enables companies to start small and elastically scale compute or storage, paying only for what they use, harnessing low-cost object stores and integrating their analytic workloads.

Vantage supports R, Python, Teradata Studio, and any other SQL-based tools. You can deploy Vantage across public clouds, on-premises, on optimized or commodity infrastructure, or as-a-service.

Teradata Vantage Native Object Store (NOS) can be used to explore data in external object stores, like Azure Blob Storage, using standard SQL. No special object storage-side compute infrastructure is required to use NOS. You can explore data located in an Blob Storage container by simply creating a NOS table definition that points to your container. With NOS, you can quickly import data from Blob Storage or even join it other tables in the database.

Alternatively, the Teradata Parallel Transporter (TPT) utility can be used to import data from Blob Storage to Teradata Vantage in bulk fashion. Once loaded, data can be efficiently queried within Vantage.

For more information see documentation.

Prerequisites

- Access to a Teradata Vantage instance.

노트

If you need a test instance of Vantage, you can provision one for free at https://clearscape.teradata.com

- An Azure account. You can start with a free account.

- An Azure Blob Storage account to store the dataset

Procedure

Once you have met the prerequisites, follow these steps:

- Create a Azure Blob Storage account and container

- Create a Data Share Account

- Create a share

- Accept and receive data using Data Share

- Configure NOS access to Blob Storage

- Query the dataset in Blob Storage

- Load data from Blob Storage into Vantage (optional)

Create an Azure Blob Storage Account and Container

-

Open the Azure portal in a browser (Chrome, Firefox, and Safari work well) and follow the steps in create a storage account in a resource group called myProviderStorage_rg in this article.

-

Enter a storage name and connectivity method. We will use myproviderstorage and public endpoint in this article.

We suggest that you use the same location for all services you create.

-

Select Review + create, then Create.

-

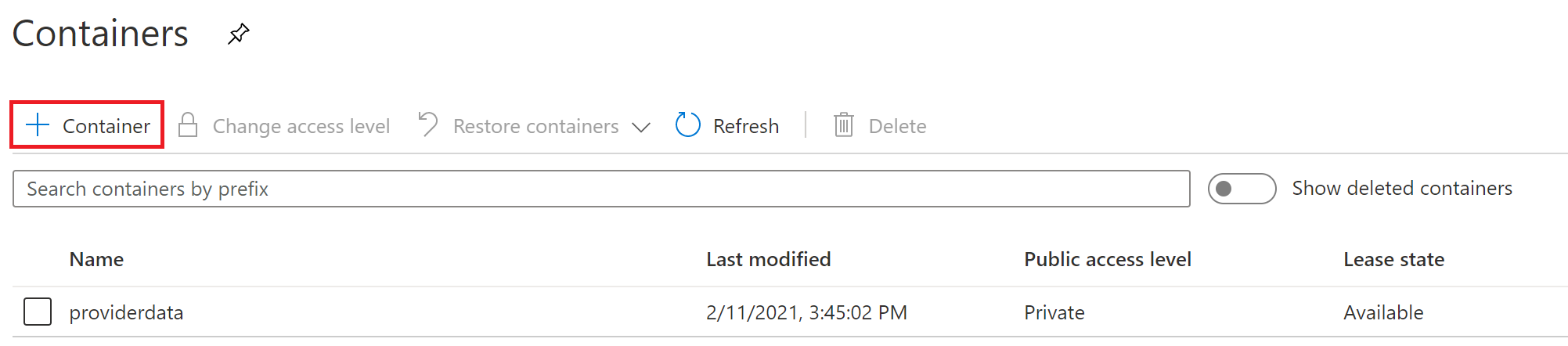

Go to resource and click Containers to create container.

-

Click the + Container button.

-

Enter a container name. We will use providerdata in this article.

-

Click Create.

Create a Data Share Account

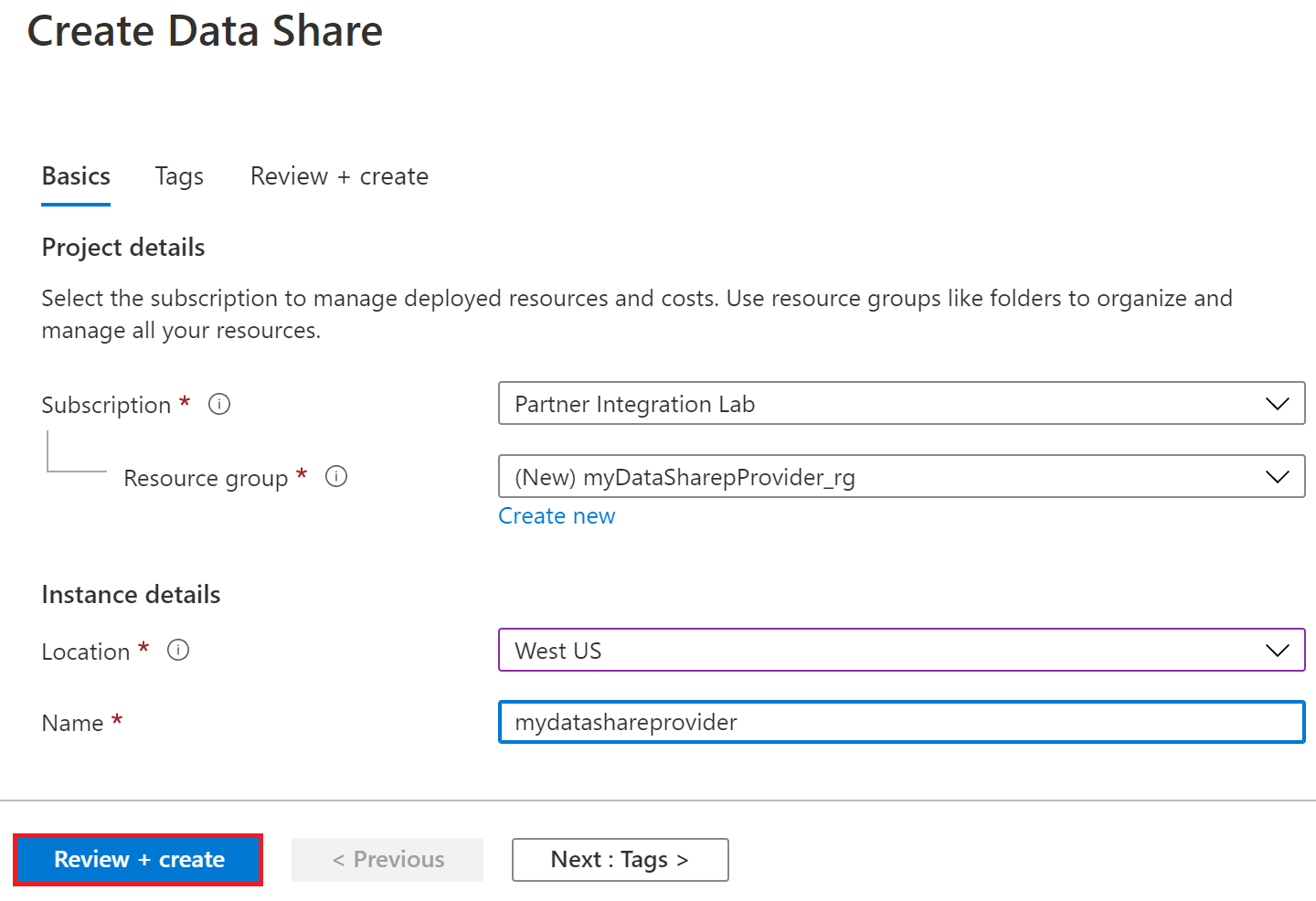

We will create a Data Share account for the provider sharing the dataset.

Follow the Create an Azure Data Share Account steps to create resource in a resource group called myDataShareProvider_rg in this article.

- In Basics tab, enter a data share account name. We will use mydatashareprovider in this article.

We suggest that you use the same location for all services you create.

-

Select Review + create, then Create.

-

When the deployment is complete, select Go to resource.

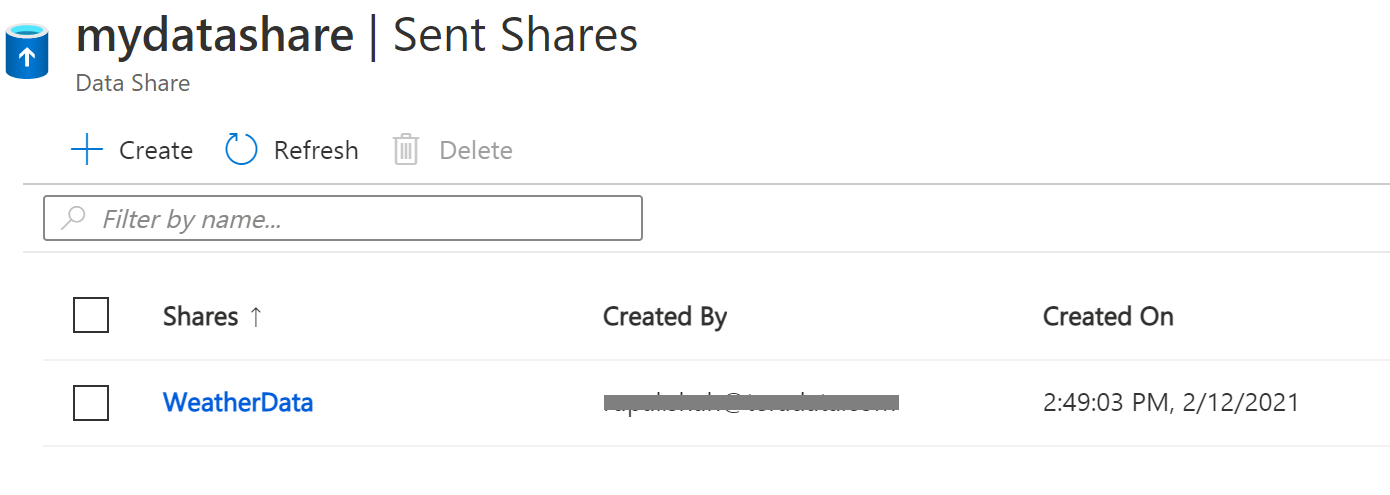

Create a Share

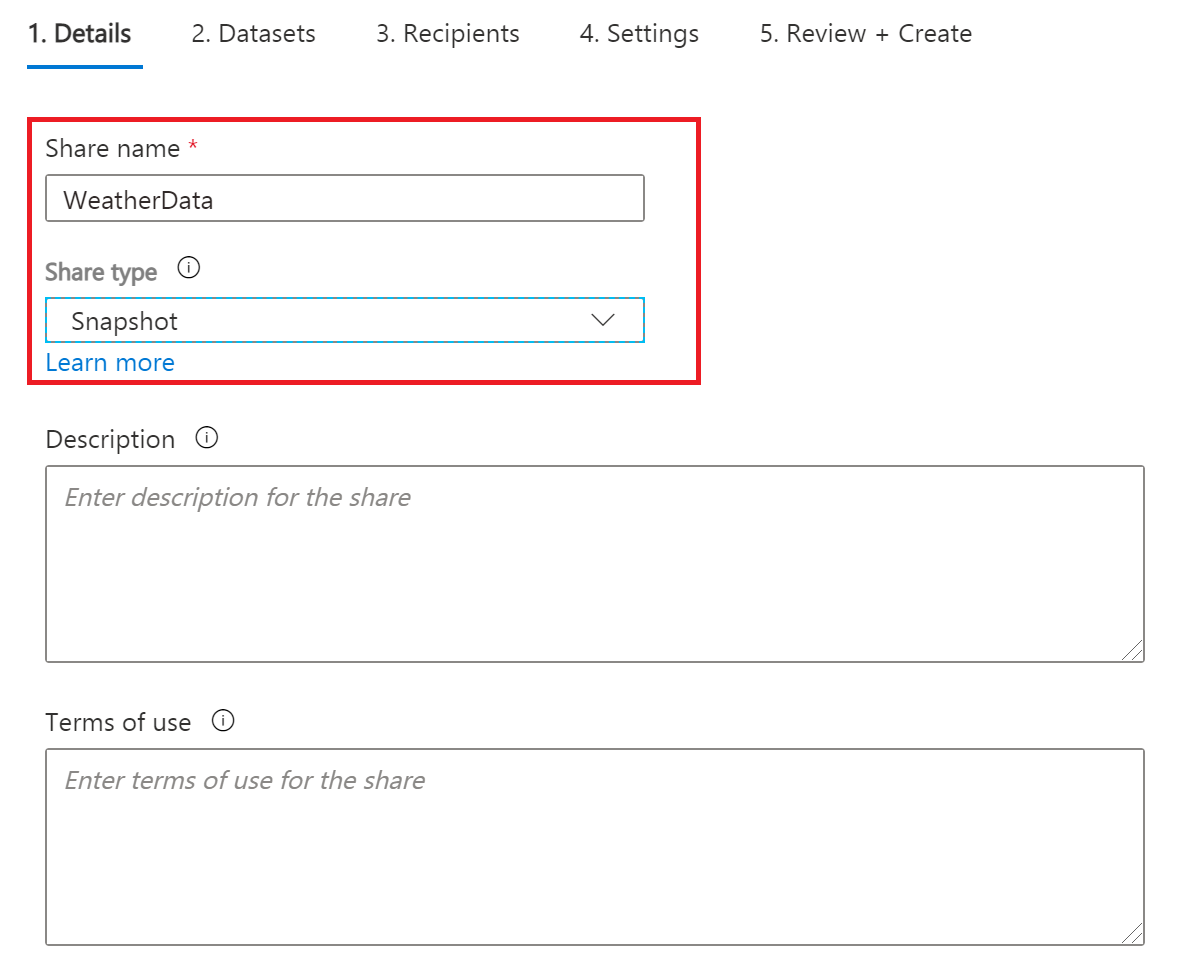

-

Navigate to your Data Share Overview page and follow the steps in Create a share.

-

Select Start sharing your data.

-

Select + Create.

-

In Details tab, enter a share name and share type. We will use WeatherData and Snapshot in this article.

Choose snapshot sharing to provide copy of the data to the recipient.

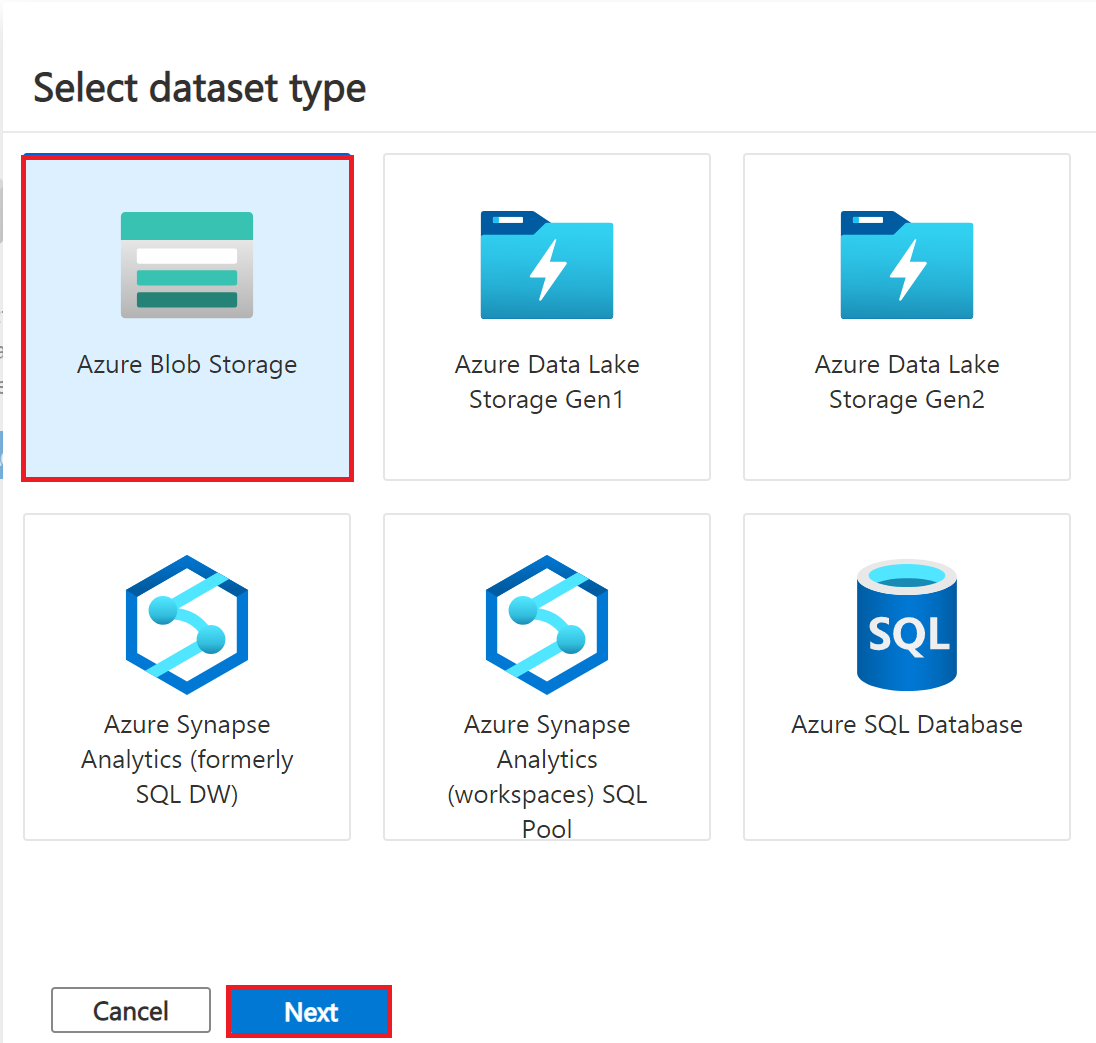

Supported data store: Azure Blob Storage, Azure Data Lake Storage Gen1, Azure Data Lake Storage Gen2, Azure SQL Database, Azure Synapse Analytics (formerly SQL DW)

Choose in-place sharing to provide access to data at its source.

Supported data store: Azure Data Explorer

-

Click Continue.

-

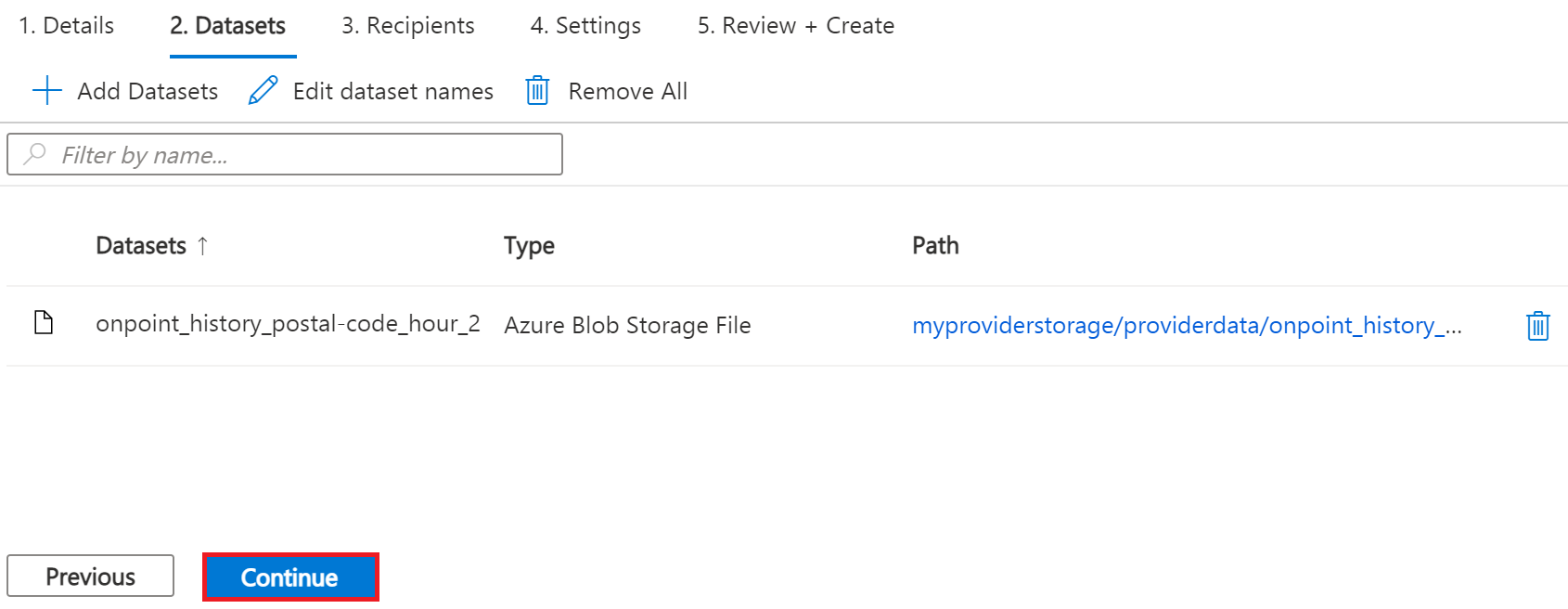

In Datasets tab, click Add datasets

-

Select Azure Blob Storage

-

Click Next.

-

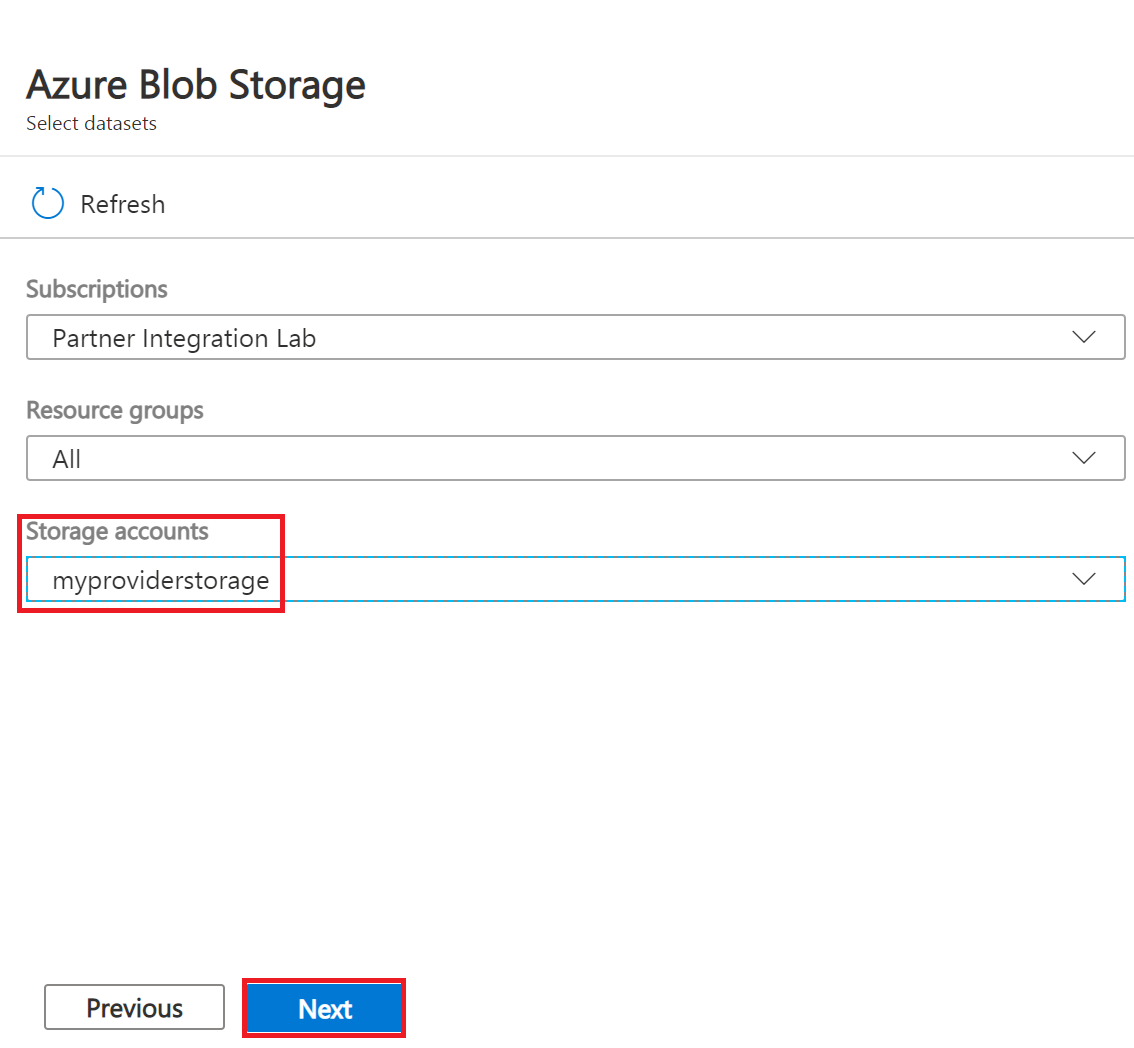

Enter Storage account providing the dataset. We will use myproviderstorage in this article.

-

Click *Next.

-

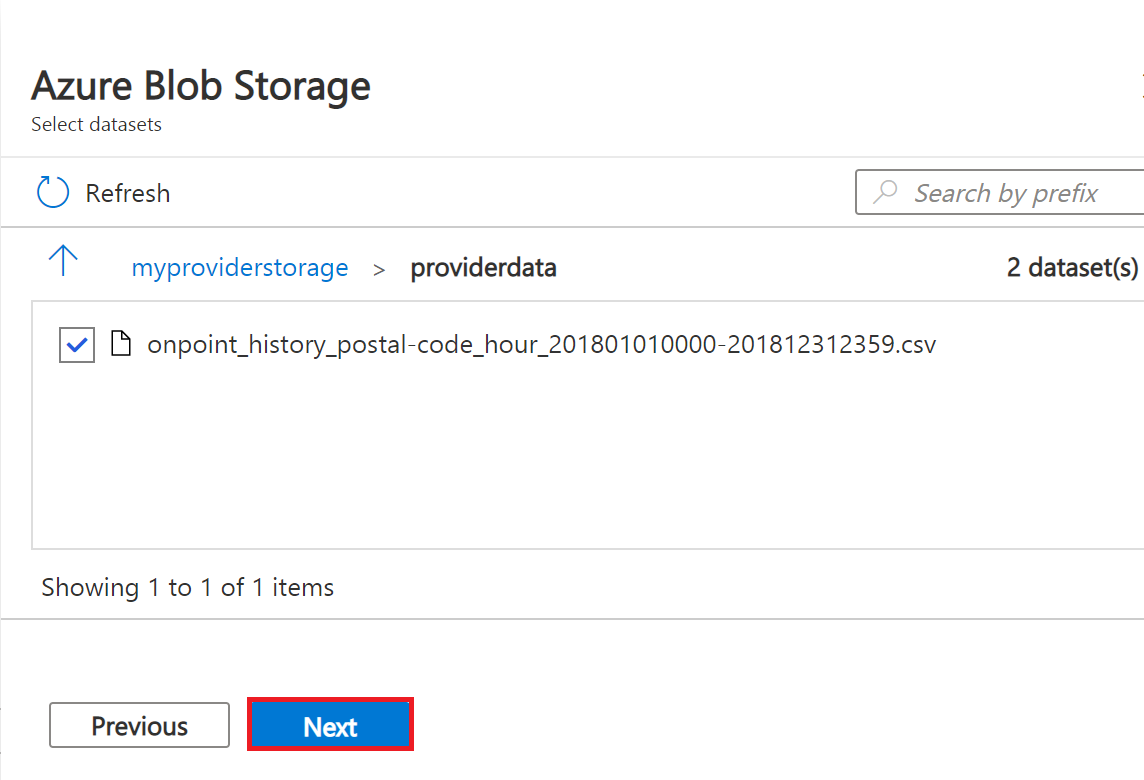

Double-click container to choose the dataset. We will use providerdata and onpoint_history_postal-code_hour.csv file in this article.

Figure 6 Select Storage container and dataset

Azure Data Share can share at the folder and file level. Use Azure Blob Storage resource to upload a file.

-

Click Next.

-

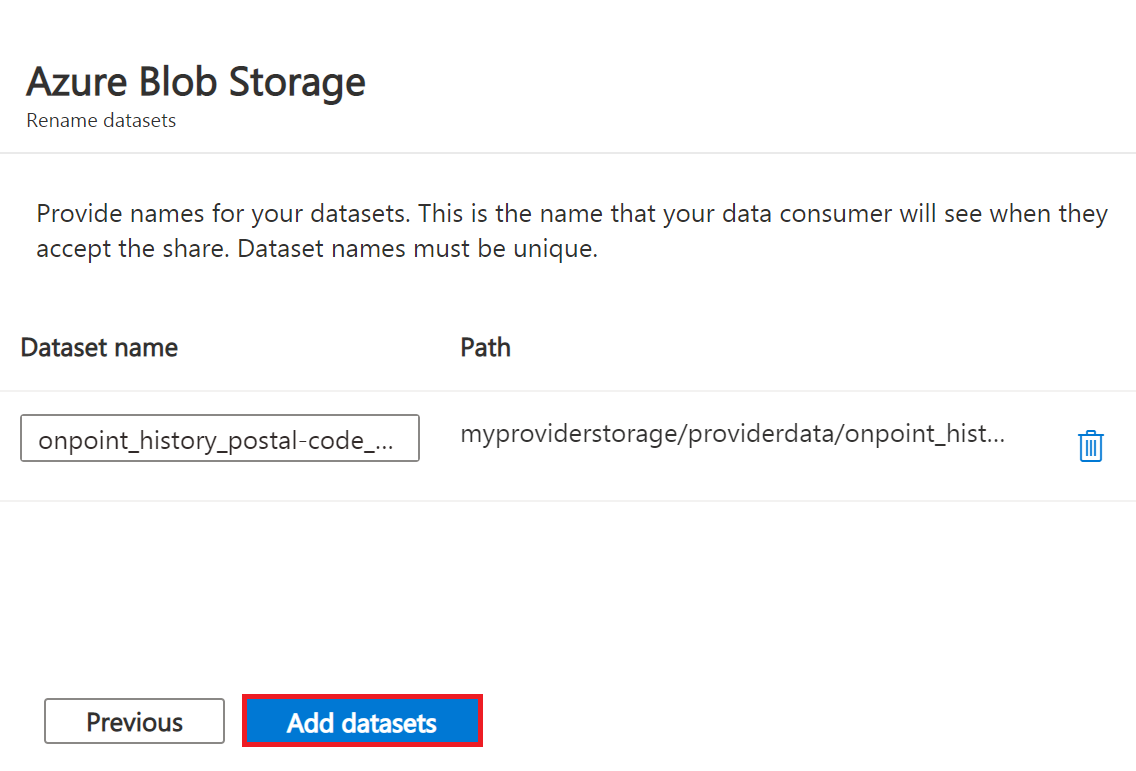

Enter a Dataset name that the consumer will see for the folder and dataset. We will use the default names but delete the providerdata folder this article. Click Add datasets.

-

Click Add datasets.

-

Click Continue.

-

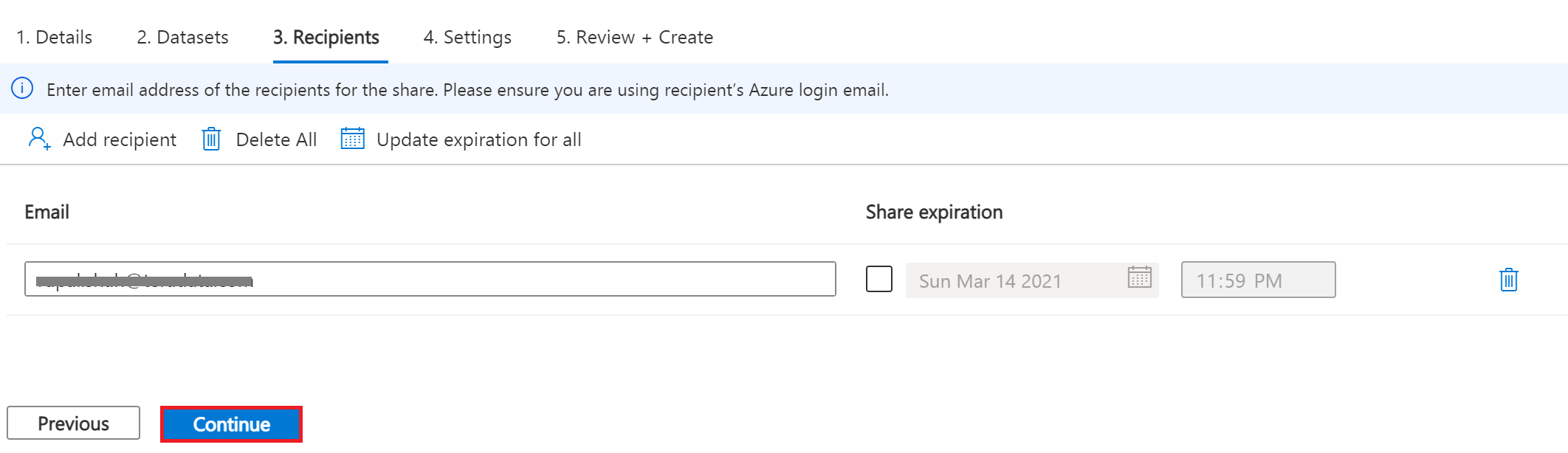

In Recipients tab, click Add recipient email address to send share notification.

-

Enter email address for consumer.

Set Share expiration for amount of time share is valid for consumer to accept.

-

Click Continue.

-

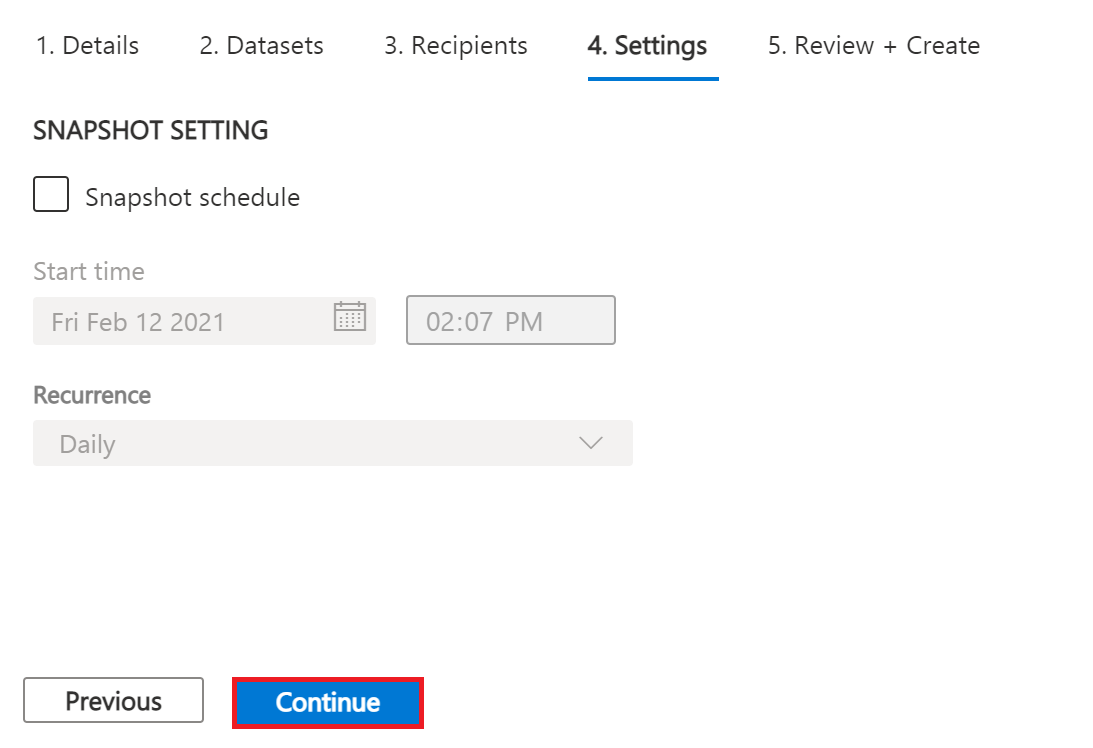

In Settings tab, set Snapshot schedule. We use default unchecked this article.

-

Click Continue.

-

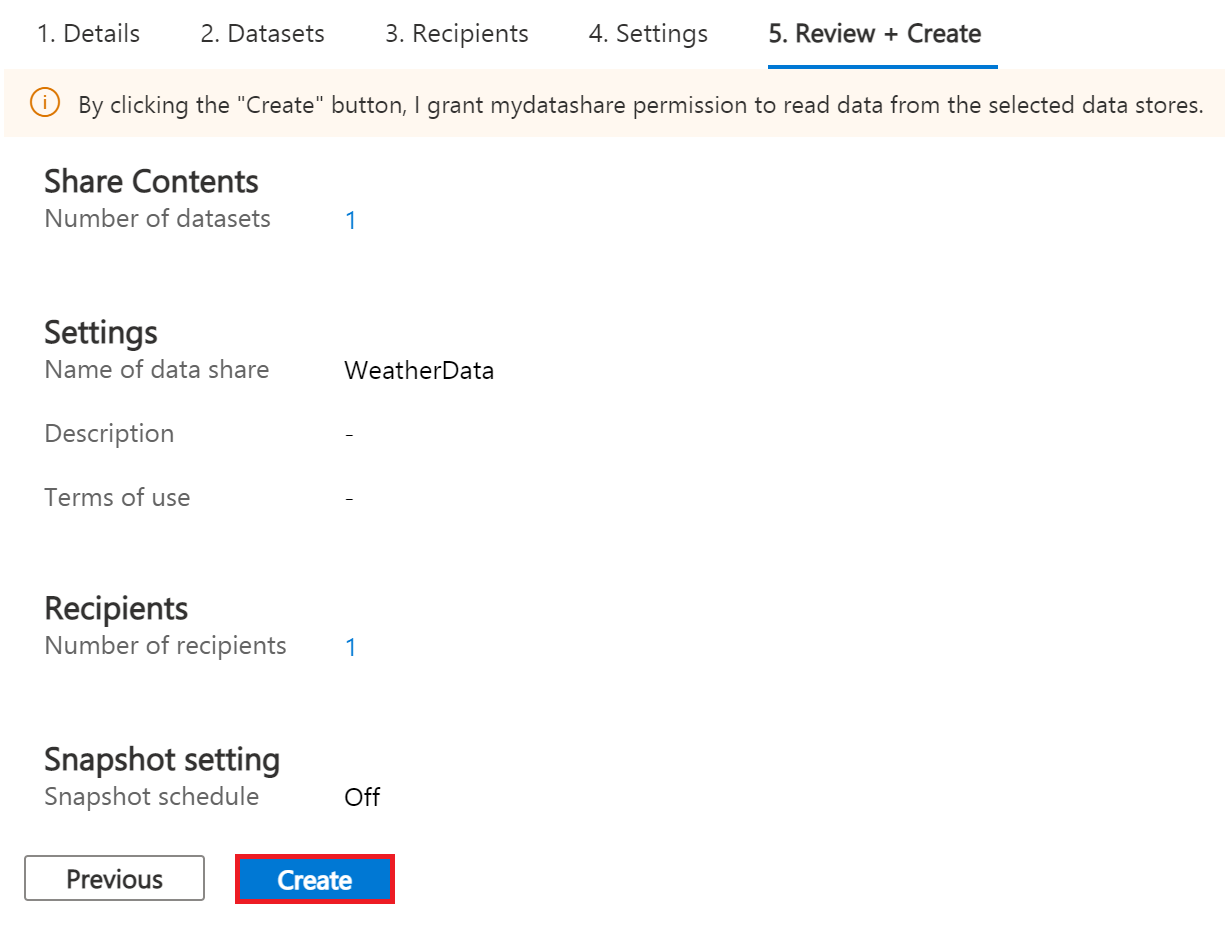

In Review + Create tab, click Create.

-

Your Azure Data Share has now been created and the recipient of your Data Share is now ready to accept your invitation.

Accept and Receive Data Using Azure Data Share

In this article, the recipient/consumer is going to receive the data into their Azure Blob storage account.

Similar to the Data Share Provider, ensure that all pre-requisites are complete for the Consumer before accepting a data share invitation.

- Azure Subscription: If you don't have one, create a +++free account+++ before you begin.

- Azure Blob Storage account and container: create resource group called myConsumerStorage_rg and create account name myconsumerstorage and container consumerdata.

- Azure Data Share account: create resource group called myDataShareConsumer_rg and create a data share account name called mydatashareconsumer to accept the data.

Follow the steps in Accept and receive data using Azure Data Share.

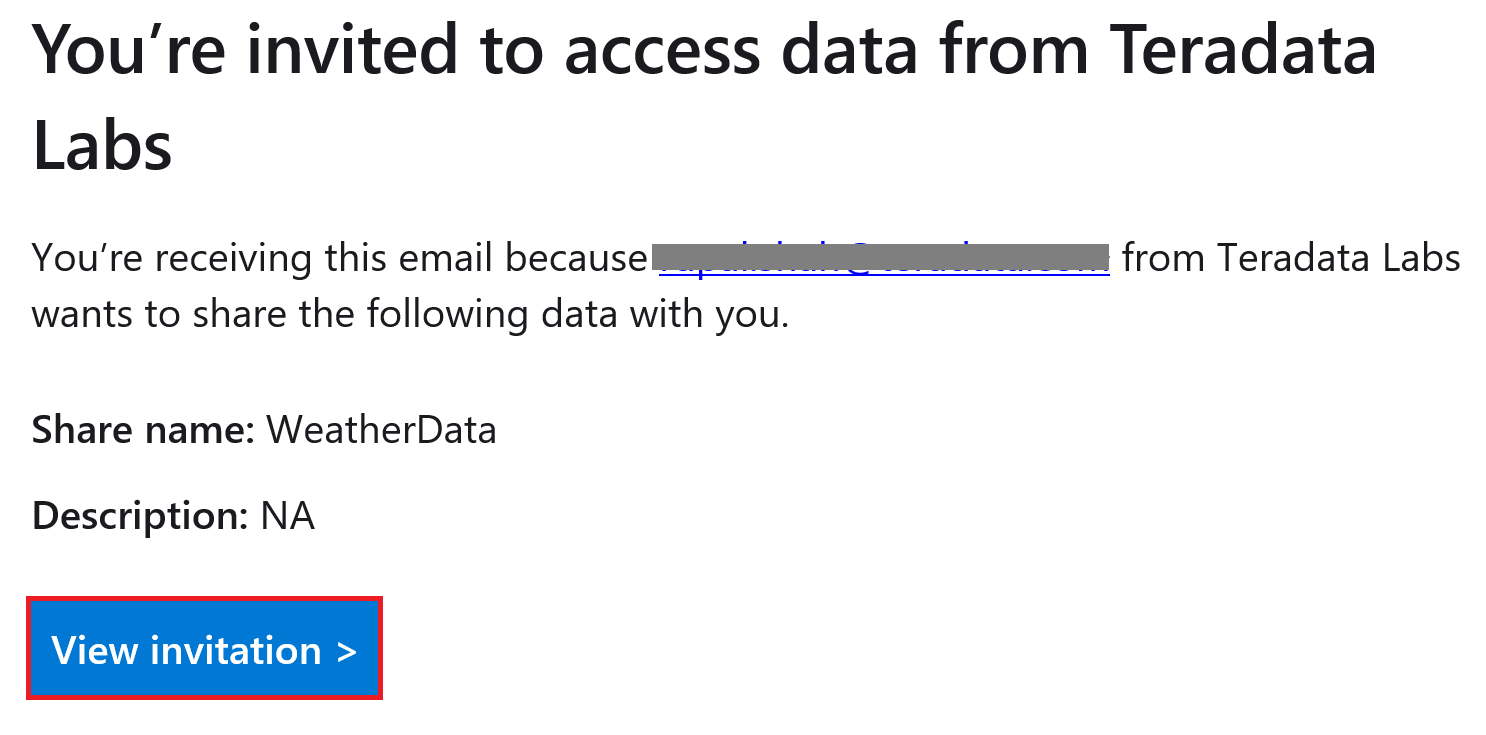

Open invitation

-

In your email, an invitation from Microsoft Azure with a subject titled "Azure Data Share invitation from +++yourdataprovider@domain.com+++. Click on the View invitation to see your invitation in Azure.

-

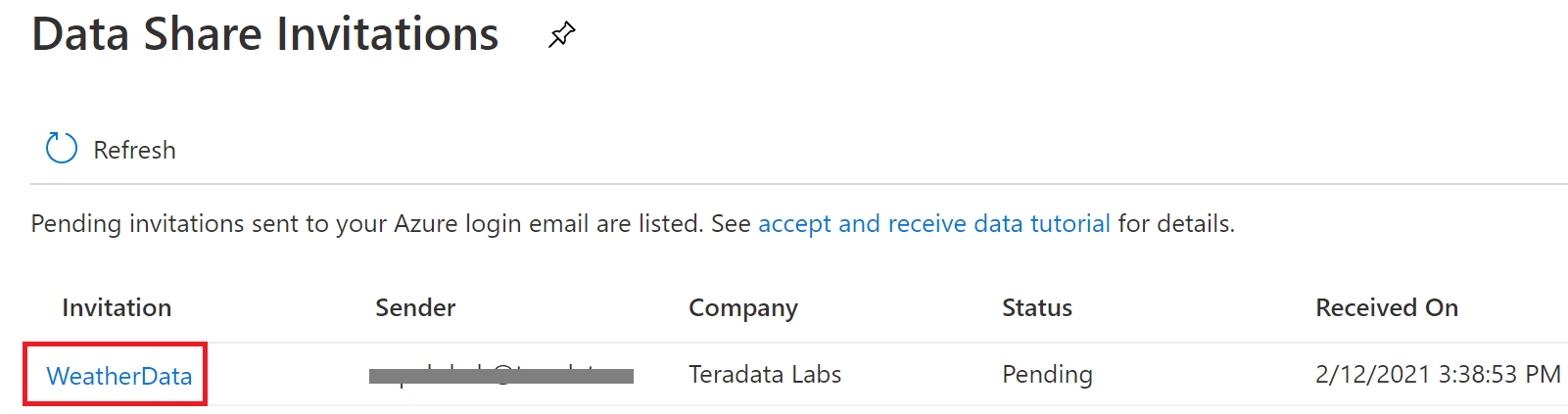

This action opens your browser to the list of Data Share invitations.

-

Select the share you would like to view. We will select WeatherData in this article.

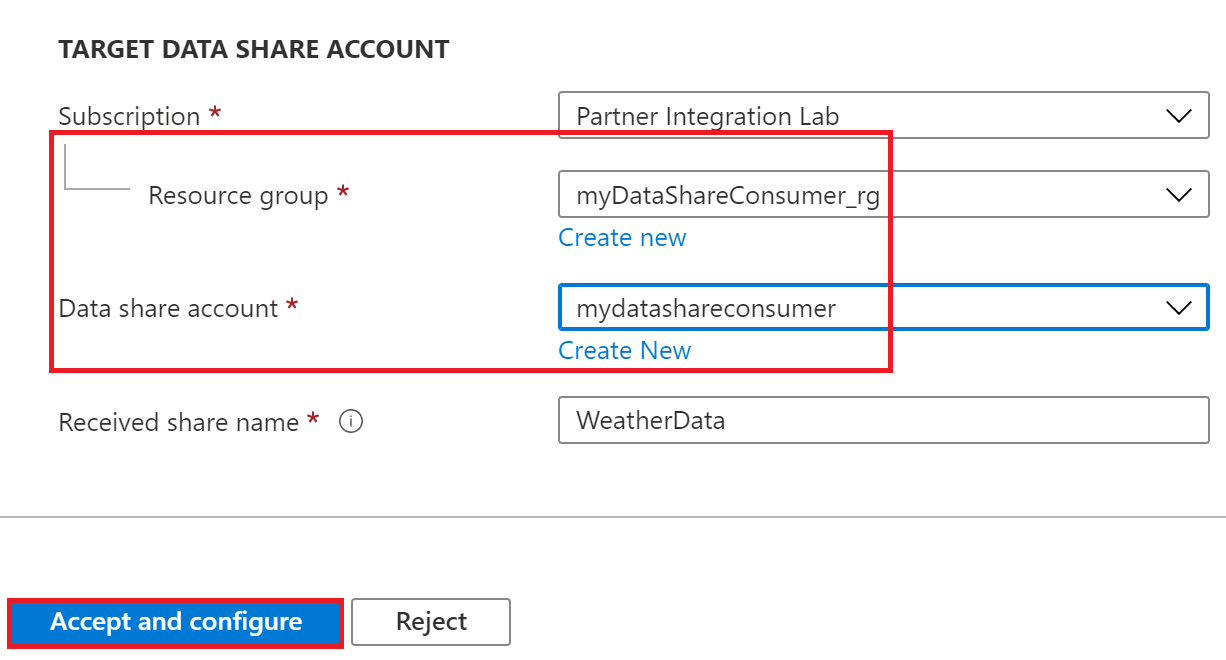

Accept invitation

- Under Target Data Share Account, select the Subscription and Resource Group that you would like to deployed your Data Share into or you can create a new Data Share here.

if provider required a Terms of Use acceptance, a dialog box would appear and you'll be required to check the box to indicate you agree to the terms of use.

-

Enter the Resource group and Data share account. We will use myDataShareConsumer_rg and mydatashareconsumer account this article.

-

Select Accept and configure and a share subscription will be created.

Configure received share

-

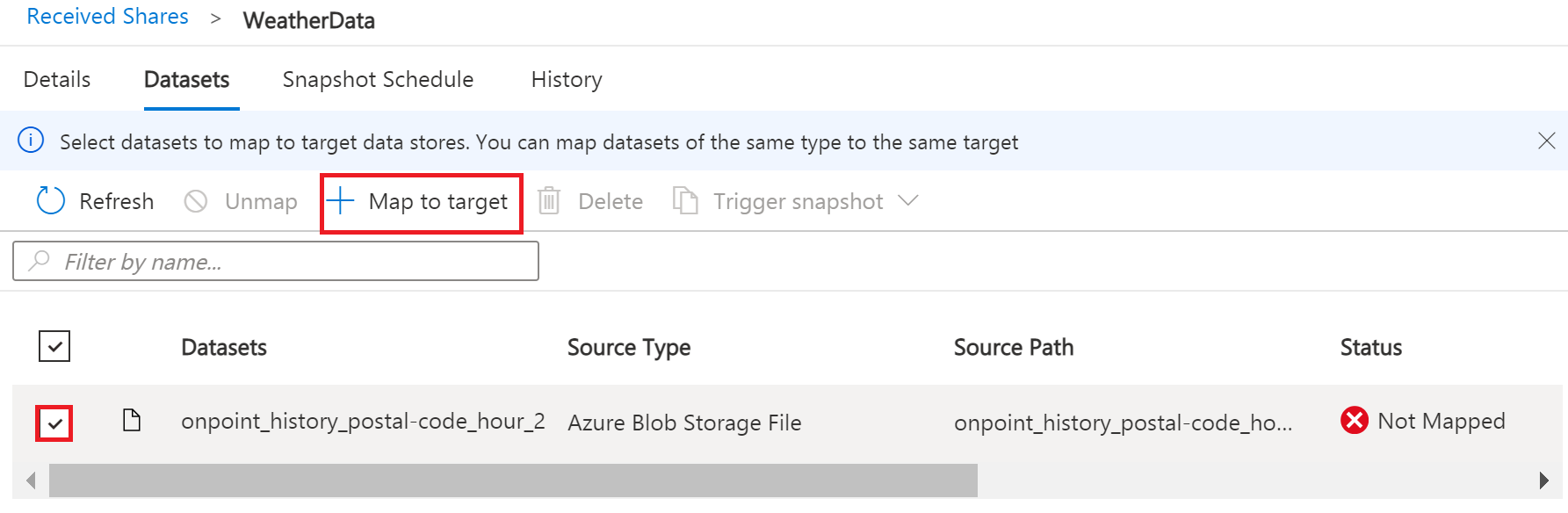

Select Datasets tab. Check the box next to the dataset you'd like to assign a destination to. Select + Map to target to choose a target data store.

-

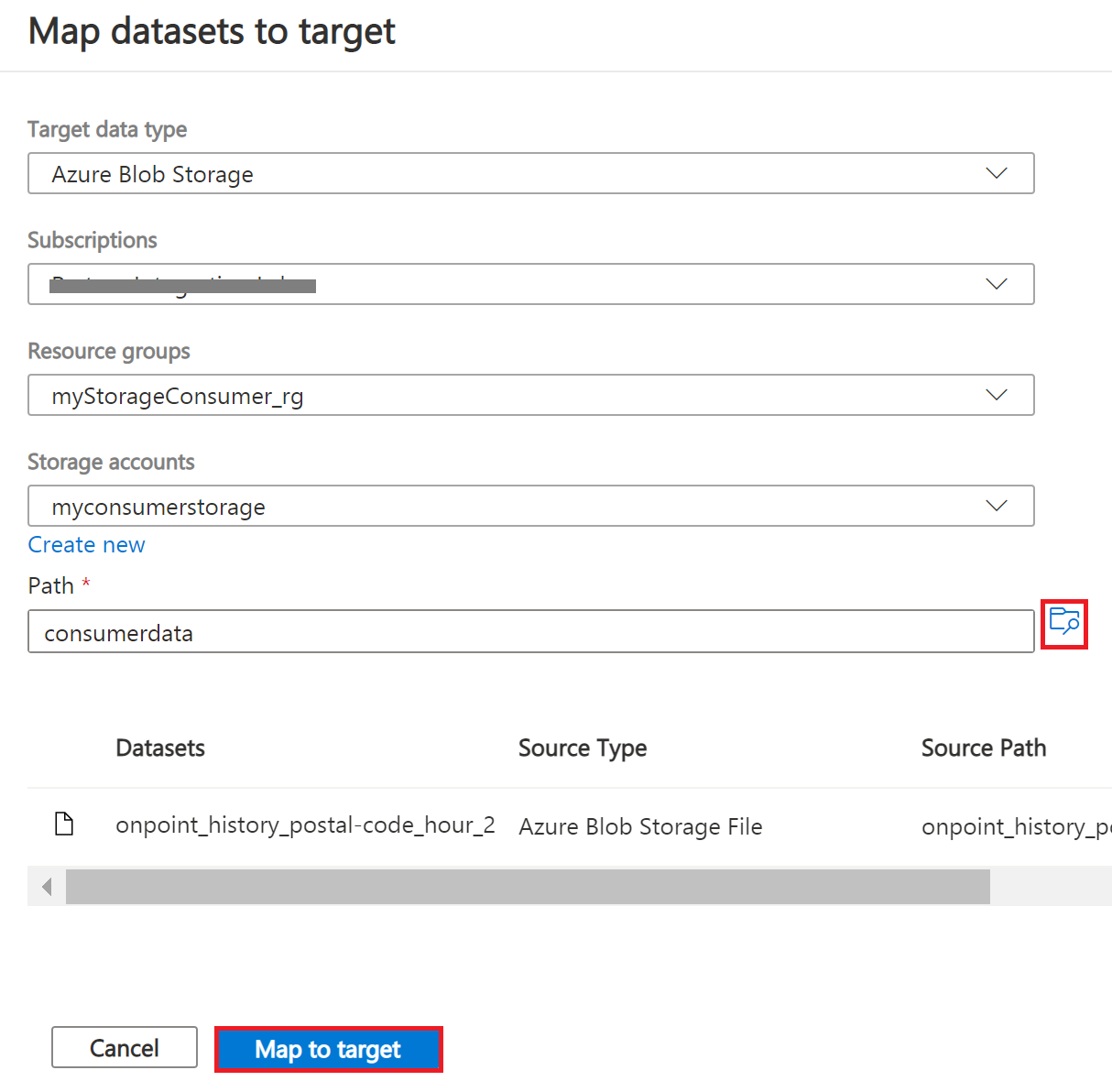

Select a target data store type and path that you'd like the data to land in. We will use consumers Azure Blob Storage account myconsumerstorage and container consumerdata for our snapshot example in this article.

Azure Data Share provides open and flexible data sharing, including the ability to share from and to different data stores. Check supported data sources that can accept snapshot and in place sharing.

-

Click on Map to target.

-

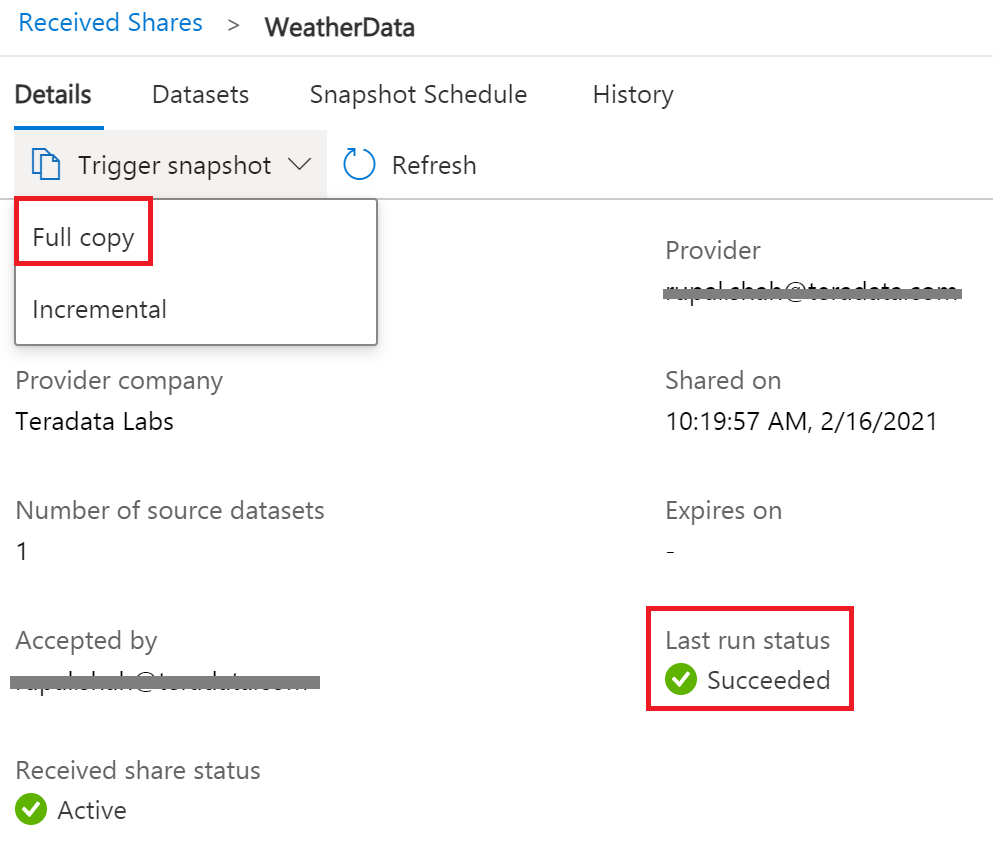

Once mapping is complete, for snapshot-based sharing click on Details tab and click Trigger snapshot for Full or Incremental. We will select full copy since this is your first time receiving data from your provider.

-

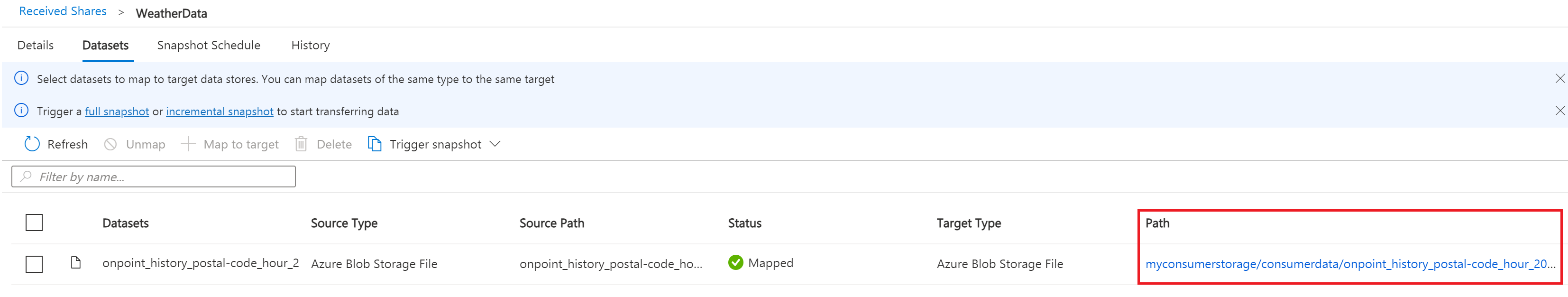

When the last run status is successful, go to target data store to view the received data. Select Datasets, and click on the link in the Target Path.

Configure NOS Access to Azure Blob Storage

Native Object Store (NOS) can directly read data in Azure Blob Storage, which allows you to explore and analyze data in Blob Storage without explicitly loading the data.

Create a foreign table definition

A foreign table definition allows data in Blob Storage to be easily referenced within the Advanced SQL Engine and makes the data available in a structured, relational format.

NOS supports data in CSV, JSON, and Parquet formats.

-

Login to your Vantage system with Teradata Studio.

-

Create an AUTHORIZATION object to access your Blob Storage container with the following SQL command.

** Replace the string for USER with your Storage Account Name. ** Replace the string for PASSWORD with your Storage Account Access Key or SAS Token.

- Create a foreign table definition for the CSV file on Blob Storage with the following SQL command.

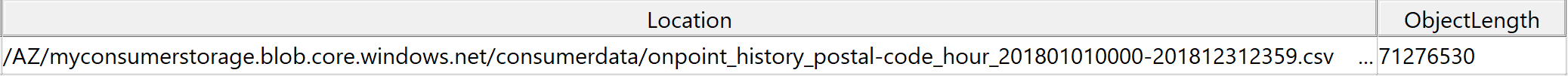

At a minimum, the foreign table definition must include a table name (WeatherData) and a location clause, which points to the object store data.

The LOCATION requires a storage account name and container name. You will need to replace this with your own storage account and container name.

If the object doesn't have a standard extension (e.g. “.json”, “.csv”, “.parquet”), then the Location…Payload columns definition phrase is also needed, and the LOCATION phase need to include the file name. For example: LOCATION (AZ/<storage account name>.blob.core.windows.net/<container>/<filename>).

Foreign tables are always defined as No Primary Index (NoPI) tables.

Query the Dataset in Azure Blob Storage

Run the following SQL command to query the dataset.

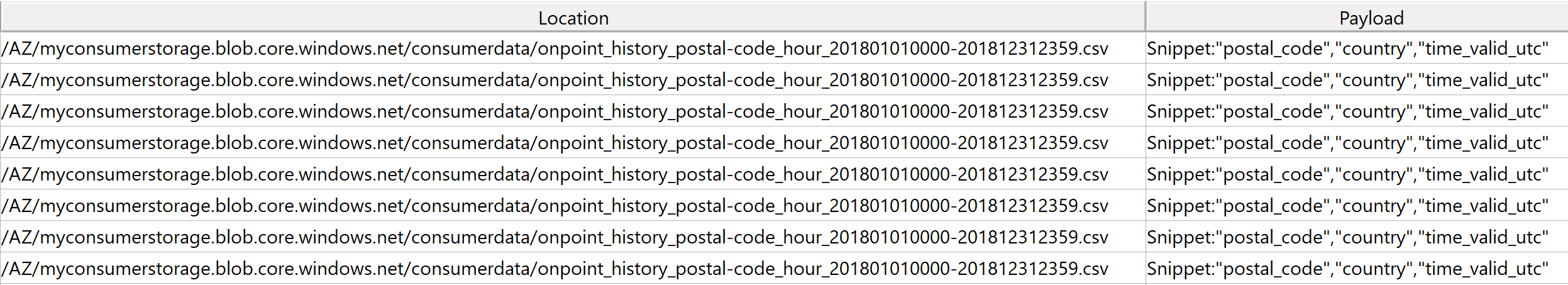

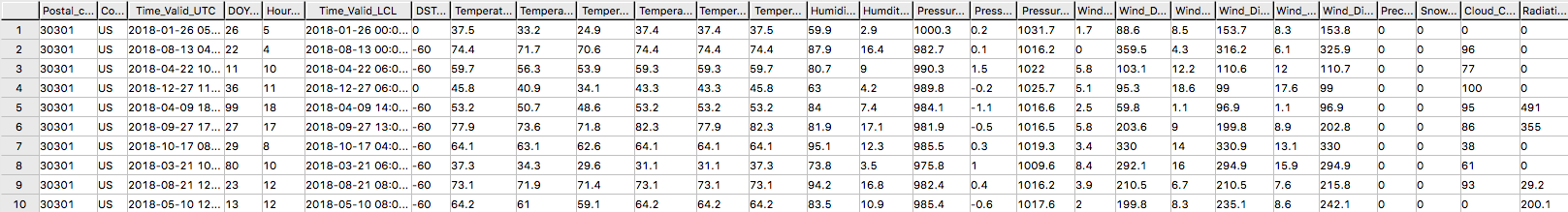

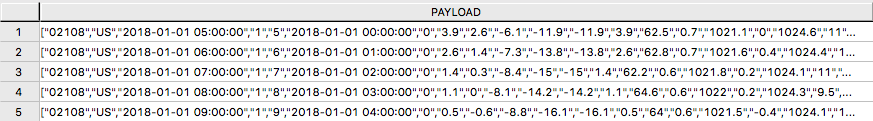

The foreign table only contains two columns: Location and Payload. Location is the address in the object store system. The data itself is represented in the payload column, with the payload value within each record in the foreign table representing a single CSV row.

Run the following SQL command to focus on the data in the object.

Create a View

Views can simplify the names associated with the payload attributes, can make it easier to code SQL against the object data, and can hide the Location references in the foreign table.

Vantage foreign tables use the .. (double dot or double period) operator to separate the object name from the column name.

- Run the following SQL command to create a view.

- Run the following SQL command to validate the view.

Now that you have created a view, you can easily reference the object store data in a query and combine it with other tables, both relational tables in Vantage as well as foreign tables in an object store. This allows you to leverage the full analytic capabilities of Vantage on 100% of the data, no matter where the data is located.

Load Data from Blob Storage into Vantage (optional)

Having a persistent copy of the Blob Storage data can be useful when repetitive access of the same data is expected. NOS does not automatically make a persistent copy of the Blob Storage data. Each time you reference a foreign table, Vantage will fetch the data from Blob Storage. (Some data may be cached, but this depends on the size of the data in Blob Storage and other active workloads in Vantage.)

In addition, you may be charged network fees for data transferred from Blob Storage. If you will be referencing the data in Blob Storage multiple times, you may reduce your cost by loading it into Vantage, even temporarily.

You can select among the approaches below to load the data into Vantage.

Create the table and load the data in a single statement

You can use a single statement to both create the table and load the data. You can choose the desired attributes from the foreign table payload and what they will be called in the relational table.

A CREATE TABLE AS … WITH DATA statement can be used with the foreign table definition as the source table.

- Run the following SQL command to create the relational table and load the data.

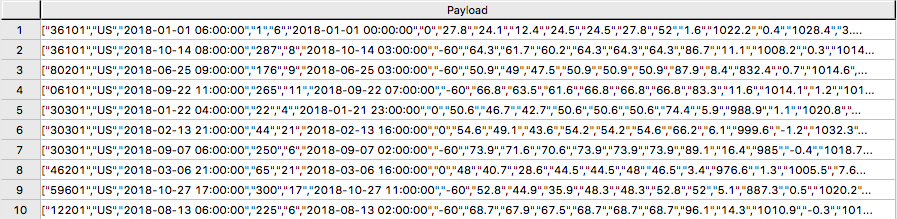

- Run the following SQL command to validate the contents of the table.

[Weather data,width=624,height=87]

[Weather data,width=624,height=87]

Create the table and load the data in multiple statements

You can also use multiple statements to first create the relational table and then load the data. An advantage of this choice is that you can perform multiple loads, possibly selecting different data or loading in smaller increments if the object is very large.

- Run the following SQL command to create the relational table.

- Run the following SQL to load the data into the table.

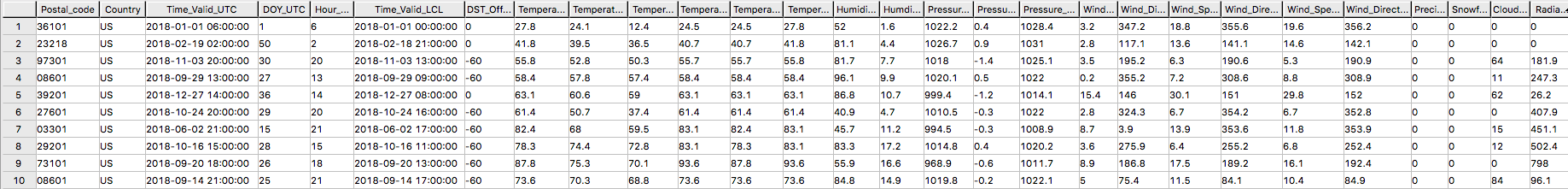

- Run the following SQL command to validate the contents of the table.

[WeatherData_temp,width=624,height=84]

[WeatherData_temp,width=624,height=84]

READ_NOS - An alternative method to foreign tables

An alternative to defining a foreign table is to use the READ_NOS table operator. This table operator allows you to access data directly from an object store without first creating a foreign table, or to view a list of the keys associated with all the objects specified by a Location clause.

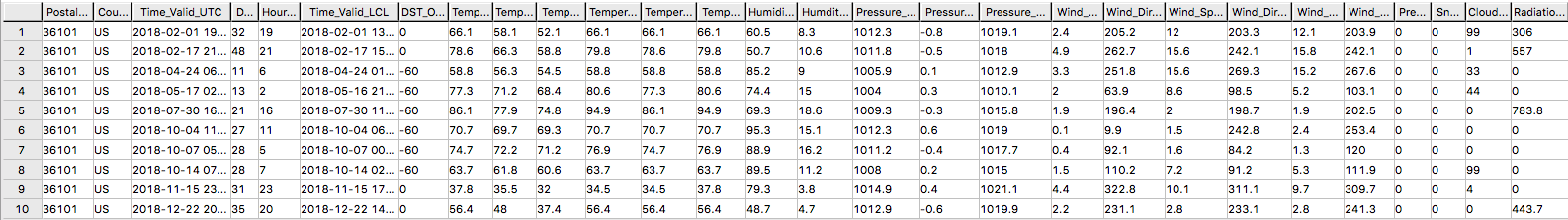

You can use the READ_NOS table operator to explore the data in an object.

- Run the following command to explore the data in an object.

- The LOCATION requires a storage account name and container name. This is highlighted above in yellow. You will need to replace this with your own storage account and container name.

- Replace the string for ACCESS_ID with your Storage Account Name.

- Replace the string for ACCES_KEY with your Storage Account Access Key or SAS Token

[READ_NOS,width=624,height=86]

[READ_NOS,width=624,height=86]

You can also leverage the READ_NOS table operator to get the length (size) of the object.

- Run the following SQL command to view the size of the object.

- Replace the values for LOCATION, ACCESS_ID, and ACCESS_KEY.

You can substitute the NOS_READ table operator for a foreign table definition in the above section for loading the data into a relational table.

If you have any questions or need further assistance, please visit our community forum where you can get support and interact with other community members.